I decided to turn my attention to the various ways that the new ArcVenture will be played. The current aim is to have versions for PC, mobile devices, PCVR and Oculus (or similar), with extra materials available in AR format for mobile devices. One of the problems with covering all of these platforms is that the standard PC game format of keyboard and mouse control will not be suitable for mobile or VR platforms. My solution is to design the user interaction with a view to it working in VR. The VR marketplace is moving quite quickly and significant changes in hardware may occur before ArcVenture gets out into the wild, but I am working with what seems to be a popular set of platforms: PCVR and Oculus (Meta).

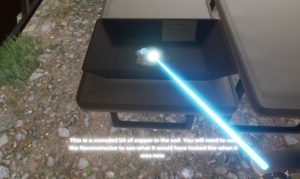

In VR we provide a hand controller to point at objects and select them. Head tracking means that although we may be moving forward, we don’t necessarily need to be facing that way. We also have the complication that movement itself can make the player feel travel sick if it is not done carefully, so it may need to be replaced with a ‘teleport’ system for those who are badly affected. For PC flat screen users we would use the same selection system, but highlight the object directly in front of us if it is selectable, and use the mouse to control turning around. We can also replicate the mouse controls on mobile devices with on-screen joystick widgets.
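To make the shared selection idea concrete, here is a minimal sketch in Python rather than in any particular game engine. It assumes every platform can supply a pointing ray (the hand controller in VR, the camera's forward direction on a flat screen) and that selectable objects can be approximated by spheres. The names `Ray`, `Selectable` and `pick` are hypothetical, invented for this illustration, and are not taken from the ArcVenture code.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Selectable:
    """Hypothetical selectable object, approximated by a bounding sphere."""
    name: str
    centre: Vec3
    radius: float


@dataclass
class Ray:
    """Pointing ray: controller pose in VR, camera forward on a flat screen."""
    origin: Vec3
    direction: Vec3  # does not need to be normalised


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ray_hit_distance(ray: Ray, obj: Selectable) -> Optional[float]:
    """Distance along the ray to the object's sphere, or None on a miss."""
    length = math.sqrt(_dot(ray.direction, ray.direction))
    if length == 0.0:
        return None
    unit = (ray.direction[0] / length,
            ray.direction[1] / length,
            ray.direction[2] / length)
    to_centre = _sub(obj.centre, ray.origin)
    along = _dot(to_centre, unit)  # distance to the closest approach point
    if along < 0.0:
        return None  # the object is behind the pointer
    perp_sq = _dot(to_centre, to_centre) - along * along
    if perp_sq > obj.radius ** 2:
        return None  # the ray passes outside the sphere
    return along - math.sqrt(obj.radius ** 2 - perp_sq)


def pick(ray: Ray, objects: List[Selectable]) -> Optional[Selectable]:
    """Return the nearest selectable object the ray points at, if any."""
    best, best_distance = None, math.inf
    for obj in objects:
        distance = ray_hit_distance(ray, obj)
        if distance is not None and distance < best_distance:
            best, best_distance = obj, distance
    return best


scene = [Selectable("door", (0.0, 0.0, 5.0), 1.0),
         Selectable("lever", (3.0, 0.0, 5.0), 0.5)]

# Flat screen: the ray comes from the camera and points straight ahead.
flat_screen_target = pick(Ray((0.0, 0.0, 0.0), (0.0, 0.0, 1.0)), scene)

# VR: the ray comes from the hand controller, independent of the head.
vr_target = pick(Ray((0.3, -0.2, 0.0), (2.7, 0.2, 5.0)), scene)
```

With this arrangement only the source of the ray differs between platforms, so the highlighting and selection logic can stay the same. A mobile build could feed the same function from the camera, with the on-screen joystick turning the view.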

We also have to think about how information is displayed to the user. In a normal flat screen game we can display menus and text prompts as overlays on the screen, so that they appear on top of any 3D objects. In VR the player is immersed in a 3D environment and overlays don’t work so well, so every text prompt and menu has to be displayed as an object in the environment. I have found so far that the best way to make all of these things work across all platforms is to design for the VR environment first and then work backwards to the flat screen alternatives.
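As a rough illustration of treating a prompt as an object in the environment, the sketch below works out where a text panel could be placed so that it floats in front of the player and faces them. It is written in plain Python and makes several assumptions of its own: yaw is measured in radians about the vertical (y) axis, a yaw of 0 means facing along +z, and the name `place_prompt` and the 1.5 unit default distance are hypothetical choices rather than anything from the game.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class PanelPlacement:
    """World-space position and facing for a prompt panel (hypothetical)."""
    position: Vec3
    yaw: float  # radians about the vertical axis


def place_prompt(head_position: Vec3,
                 head_yaw: float,
                 distance: float = 1.5,
                 in_vr: bool = True) -> Optional[PanelPlacement]:
    """Place a prompt in front of the player, turned to face them.

    Returns None for flat screen builds, where this sketch assumes the
    prompt is drawn as an ordinary screen overlay instead.
    """
    if not in_vr:
        return None
    x, y, z = head_position
    # Step 'distance' units along the direction the head is facing.
    position = (x + distance * math.sin(head_yaw),
                y,
                z + distance * math.cos(head_yaw))
    # Turn the panel half a circle so its front faces back at the player.
    panel_yaw = (head_yaw + math.pi) % (2.0 * math.pi)
    return PanelPlacement(position, panel_yaw)


# A standing player at eye height 1.6, looking along +z.
placement = place_prompt((0.0, 1.6, 0.0), 0.0, distance=2.0)
```

Designing the prompt as a placed object first means the flat screen version is the simpler fallback: the same text can be drawn as an overlay when `in_vr` is false.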